Connecting Posit Workbench (RStudio) to GitHub with HTTPS

If you're an RStudio user using Posit Workbench and want to use GitHub for source control (you should), this is the guide for you. There are two ways...

Stewart Williams

Nov 2020

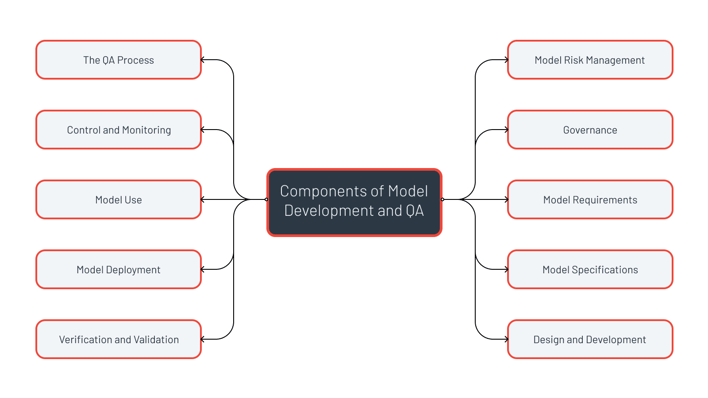

While talking to one of my colleagues recently, she challenged me to put down in a mind map all the things I thought organisations needed to consider when performing analytical modelling QA.

I accepted the challenge, and my resulting mind map was detailed, to say the least, covering many aspects of analytical model development, governance and use.

I’ve distilled these down into the ten most important components that organisations need to manage when considering how they can put a rigorous analytical model development and QA process in place.

The US Federal Reserve’s ‘SR 11-7: Guidance on Model Risk Management’ defines model risk as “the potential for adverse consequences from decisions based on incorrect or misused model outputs and reports”. It’s a good definition.

While sophisticated processes are already in place in the Finance Sector, model risk must be formally and proactively managed by any organisation that relies on analytical models in decision-making.

The level of Quality Assurance required for a model depends on both the level of model risk and the complexity of the model.

To effectively implement analytical model risk management, processes for model governance are essential.

Roles, responsibilities, and standards for model development and assurance processes should be well defined (at both the organisational and project levels). Each model should have a designated ‘owner’ who takes responsibility for it throughout its lifecycle and has the task of confirming it is fit for purpose.

Organisations should understand the overall level of model risk they face. A model risk register can be used to provide an overview of models in terms of their risk and their complexity, documenting assurance activities and helping auditors ensure processes are followed.
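As a rough illustration of what a model risk register might hold, here is a minimal Python sketch. All class, field and method names are hypothetical, and the rule that QA effort follows the higher of risk and complexity is an assumption made for the example, not a rule taken from SR 11-7 or any other standard.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Level(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class ModelEntry:
    name: str
    owner: str          # the designated model owner
    risk: Level         # assessed level of model risk
    complexity: Level   # assessed model complexity
    assurance_log: list[str] = field(default_factory=list)

    def qa_tier(self) -> Level:
        # Assumed rule for illustration: QA effort follows the higher
        # of the model's risk and its complexity.
        return max(self.risk, self.complexity)


@dataclass
class RiskRegister:
    entries: dict[str, ModelEntry] = field(default_factory=dict)

    def add(self, entry: ModelEntry) -> None:
        self.entries[entry.name] = entry

    def record_assurance(self, name: str, activity: str) -> None:
        # Document an assurance activity against a registered model.
        self.entries[name].assurance_log.append(activity)

    def without_assurance(self) -> list[str]:
        # Models with no documented assurance activity, for auditors to chase.
        return sorted(n for n, e in self.entries.items() if not e.assurance_log)
```

An auditor-style query such as `without_assurance()` is the kind of overview the register is meant to provide; a real register would likely carry review dates, sign-offs and links to documentation as well.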

Model requirements represent the model from the perspective of the user, or the organisation as a whole.

Most models have some level of documentation for their functional requirements, whether these be data requirements, technical requirements or stipulations as to how a business process might be supported.

While often neglected, this stage must also encompass non-functional requirements, e.g. usability, interoperability (with other software, systems, models), security, understandability/transparency, maintainability.

The requirements stage must also stipulate the delivery timescales – often it is better to have a simple model delivered quickly than a complex model later.

The Model Specification represents the model from the perspective of the modeller/developer.

In this step it’s important to answer the fundamental questions about the model itself:

These specification items need to be linked to model requirements, enabling traceability and facilitating validation and verification processes.
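One way to picture the traceability described here is a simple check that every requirement is covered by at least one specification item, and that every specification item points back to a known requirement. The sketch below is illustrative only: the function name and the identifier scheme (`R1`, `S1`, and so on) are hypothetical.

```python
def trace_gaps(requirement_ids, spec_links):
    """Return (uncovered requirements, untraceable specification items).

    requirement_ids: iterable of requirement identifiers.
    spec_links: dict mapping each specification item to the list of
                requirement identifiers it claims to satisfy.
    """
    known = set(requirement_ids)

    # Collect every requirement that some specification item links to.
    covered = set()
    for linked in spec_links.values():
        covered.update(linked)

    # Requirements that no specification item addresses.
    uncovered = sorted(known - covered)

    # Specification items that link to no known requirement at all.
    untraceable = sorted(
        item for item, linked in spec_links.items() if not set(linked) & known
    )
    return uncovered, untraceable


# Hypothetical example data.
requirements = ["R1", "R2", "R3"]
links = {"S1": ["R1"], "S2": ["R1", "R2"], "S3": []}
uncovered, untraceable = trace_gaps(requirements, links)
```

In this example `R3` has no specification item behind it and `S3` traces back to nothing, which are exactly the gaps that later make validation and verification harder.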

In this stage you need to define in detail exactly how the model will be put together. You need to:

To future-proof the QA process, design documentation should allow someone else (with the necessary skills) to take over development and maintenance of the model, and should provide traceable routes back to the model specification.

Verification is the process of determining that a model implementation accurately represents the model specification.

Validation can be considered from two different perspectives:

The verification and validation stage should address both functional and non-functional requirements.
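As a small sketch of verification in the sense used above, the example below checks an implementation against reference cases taken from a specification. The model (compound growth), the reference cases and the tolerance are all assumptions chosen for illustration; a real specification would supply its own.

```python
import math


def compound_growth(principal, rate, years):
    # Hypothetical implementation under test.
    return principal * (1 + rate) ** years


# Reference cases assumed to come from the model specification:
# (inputs, expected output).
SPEC_CASES = [
    ((100.0, 0.10, 1), 110.0),
    ((100.0, 0.10, 2), 121.0),
    ((250.0, 0.00, 5), 250.0),
]


def verify(model, cases, rel_tol=1e-9):
    """Return the cases where the implementation departs from the specification."""
    failures = []
    for args, expected in cases:
        actual = model(*args)
        # Compare with a relative tolerance to allow for floating-point rounding.
        if not math.isclose(actual, expected, rel_tol=rel_tol):
            failures.append((args, expected, actual))
    return failures
```

An empty list from `verify(compound_growth, SPEC_CASES)` means the implementation matches the specification on these cases only. It says nothing about whether the specification is the right one for the user's needs, which is the job of validation.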

How a model is to be eventually deployed needs to be considered very early on in the requirements stage. If a model is for internal use within an organisation, this may be fairly straightforward.

Consideration should be given to the IT permissions needed to install the model in the first place, the tools that need to be on the user’s PC, and so on.

When a model is to be released to the public (as some of our clients require), there can be a range of implementation environments. This extends the need for deployment testing, which increases costs and turnaround time, so it needs to be built into the plan from the outset.

In many organisations the model users are not necessarily the model developers. It is important that users, both at present and in the future, can use a model correctly and understand its features and limitations.

At a minimum, some sort of user documentation (or help file) is a necessity, but more substantive user training may be required. This could cover both use of the software and effective use of the model:

You’ll also need to consider what user support may be necessary over the entire model lifecycle, not just in the immediate term.

Control and Monitoring is required both during development and use. Examples include:

Quality Assurance is fundamental to managing analytical model risk. It validates the model development and management processes. It also assures that the type of activities referred to in this infographic are being performed in practice. It results in recommendations as to what improvements can be made.

Though these components are described above as if occurring in sequence, this is rarely the case. One challenge in putting together an iterative approach to model development, for example, is how these components are distributed and addressed across the iterations. More on that in another post.

Learn more about our Analytical Model Testing and Quality Assurance services.
