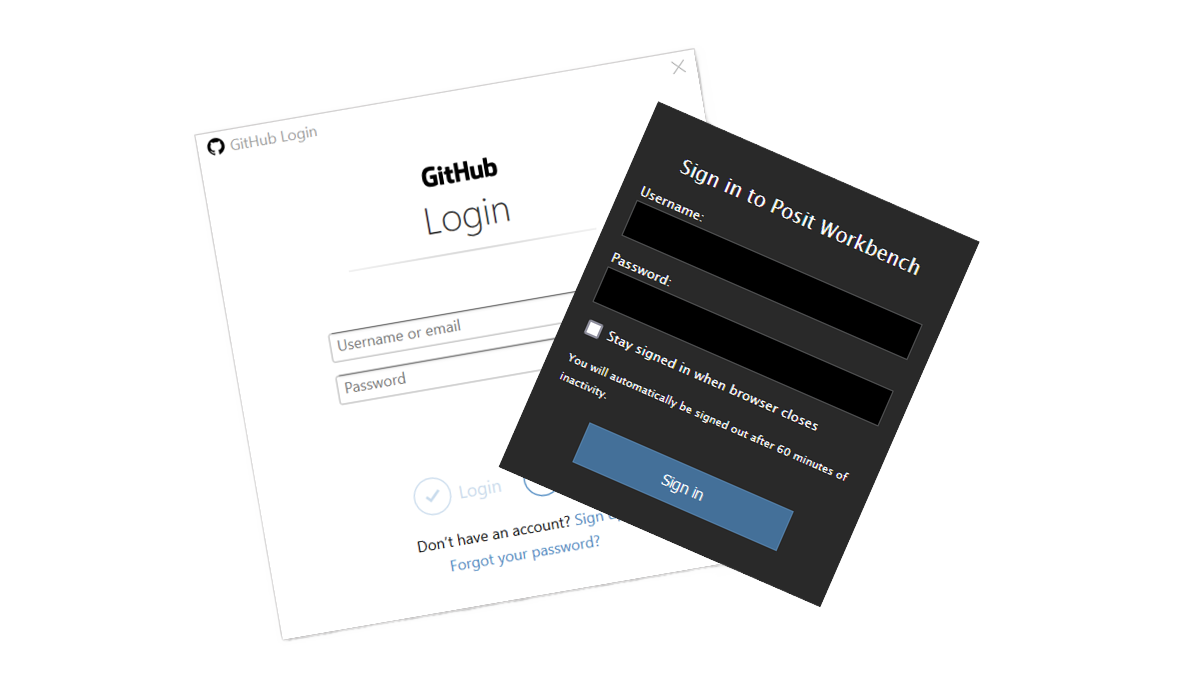


Dr. Mathew Davies

Apr 2020


Managers rely on analytical models to inform difficult decisions: where to build that next hospital; how to allocate resources during the next budgetary cycle; whether performance is likely to meet targets; what the fall-out might be from a proposed or feared change; and whether that business case really warrants funding.

In applications such as these (and a thousand others) business leaders expect models to provide reliable answers to business-critical questions within tight timescales. Should a model fail, especially at short notice, the repercussions for the host organisation can be traumatic.

Models can fail in a spectacular number of ways, especially when captured in software: at one extreme, the model may simply refuse to run. At the opposite extreme, a model may generate corrupt results without any obvious sign of a problem.

Countless authors have dealt with the software side of these problems but far subtler modes of failure are possible, manifesting themselves in symptoms including:

Model failures can usually be traced back to one of the broad categories of causes:

One frequent contributor to model failure is inadequate documentation; another is staff turnover. When both occur together, the result can be an orphaned model, i.e. an undocumented model that has been abandoned by its developers.

Despite incessant cajoling and warnings, many analysts still fail to write comprehensive documentation for their models. In the short term, gaps in the documentation can be papered over by verbal briefings from the team developing the software. However, as the original team members move on and the model is gradually extended or updated, detailed knowledge of the overall model drains away.

The result is a maintenance team whose members’ collective knowledge of the orphaned analytical model leaves much to be desired.

On the day that the orphaned analytical model fails, it transpires that no-one is quite sure how it performs certain calculations; no-one knows what the model has assumed about condition A or constraint B; no-one knows how to alter the model in order to answer the customer’s latest query; no-one’s quite sure of the knock-on effects that altering one part of the model might have on all others; and there’s a distinct absence of analysts offering to help out.

It’s a better idea to prevent a model becoming orphaned in the first place than to deal with the consequences at a later date. Here are three obvious – yet oft-ignored – preventative measures:

As business leaders become ever more reliant upon analytical models to inform major organisational decisions, they need to be well prepared to deal with models that fail. If you rely on decision-making models, then solid knowledge management and succession planning from the outset are essential. Analytical model testing and quality assurance processes are key.

For more on what to do if your model fails and how to prevent it, read my next blog posts in this series, out within the next couple of weeks.
